Playing video games or “Gaming” is a popular trend transcending ages, cultures, professions, and social backgrounds. Previously, medical experts advocated outdoor games as a means to improve health. While the benefits of physical games remain undeniable, today, video gaming is said to be equally beneficial for mental health and cognition.

Research on video gaming conducted by the University of Rochester unveiled surprising results about the gaming trend with millions of gamers under its spell. From a neurological viewpoint, video gaming positively impacts one’s:

- Contrast sensitivity

- Collaborative skills

- Reflex responses

- Eye and hand coordination

- Memory power

While these findings put a positive spin on video gaming, Gaming Moderation is what keeps the online gaming atmosphere safe and welcoming.

Gaming Moderation: What Is It All About?

Gaming Moderation is the digital process of screening and filtering all user-generated gaming content to ensure compliance with your gaming policies and regulations. Gaming content moderators sift through volumes of images, texts, and audio messages to filter out anything that compromises the quality and safety of your online gaming site.

In a nutshell, Gaming Moderation is imperative in creating safe, respectful, and enjoyable gaming environments for users of all ages.

Why is Gaming Behavior Moderation Important?

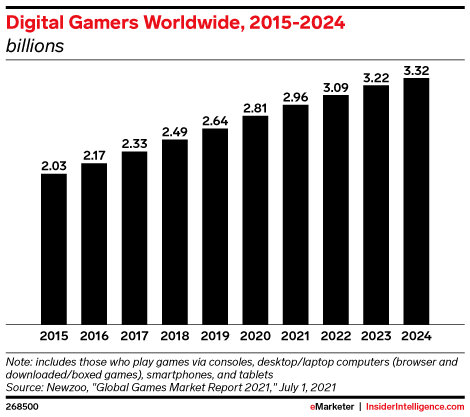

The online gaming world is an ever-expanding universe. With over 500 million gamers playing online, there is a non-stop exchange of interactions and content.

Source: eMarketer

Online gaming is not only about playing a game. Gamers take on aliases, characters, and personalities and “live” in their own gaming communities. Hence, anything that happens within these communities can impact the players’ lives, perceptions, and actions.

Without content moderation, players could face verbal abuse, derogatory comments, and hostility. A simple discussion can quickly escalate into a verbal battle that destroys the harmony and sportsmanship within the gaming community. Such unsavory incidents can render online gaming unsafe and demotivating for prospective players. Game chat moderation can, however, nip such issues in the bud.

Gaming site moderation is a continuous task, and gaming content moderators have their work cut out for them. User-generated content grows as the number of players and the level of gaming activity increase. Nevertheless, all of it must be moderated, no matter how many images, chats, and posts users add to your games.

The Role of AI & Humans in Gaming Moderation

There are two approaches to Gaming Moderation:

1. Human intervention

2. AI-based moderation

Content moderation with Artificial Intelligence has one primary goal: to remove hateful, harmful, and malicious content and so ensure a safe and fair gaming environment. Artificial Intelligence is, without a doubt, a potent tool. In Gaming Moderation, AI-powered automated tools use intelligent algorithms, in-built knowledge, and Natural Language Processing (NLP) to process content and filter out abusive players. Without AI, it would be impossible to moderate the amount of gaming content generated every day.

However, even AI has its limitations. While it can distinguish clearly good content from clearly bad, it struggles to identify sarcastic comments and content that masks harmful intent. Here’s where human intelligence makes itself indispensable. Gaming content moderators can sift through user-generated content and review flagged items to root out the malicious comments that mar your site’s reputation.

But this task alone would require enormous effort and time.

Basically, what one can do, the other cannot, and vice versa. These differences set the stage for an ongoing debate about AI-driven content moderation versus human moderation.

Let’s inspect how one differs from the other.

AI-Moderation Vs. Human Moderation: The 5 Key Factors

1. The Expenses

Both a team of content moderators and AI-driven content moderation tools will cost you. The stark difference lies in how those costs scale: AI tools are largely an upfront investment, whereas the expense of maintaining a moderation team will likely increase with the volume of content.

AI moderation also requires only a small team to run and functions round-the-clock without breaks. Hence, you are likely to see a quicker ROI.

2. The Quality

When it comes to moderation quality, human moderators win hands down. They are innately equipped to judge the intention behind sarcastic or veiled remarks, masked images, and phrases. Artificial Intelligence is yet to beat us in this respect: it hits major blind spots here because it lacks the nuanced understanding needed to make a qualitative judgment.

3. The Context

Here again, AI-based moderation does not fully understand how content is used in context, so it can fail to differentiate between harmful and harmless uses of the same words or images. Human moderators have the upper hand in making context-related decisions. Moreover, gaming content moderators are better placed to handle moderation issues that hinge on cultural and language context.

4. The Scalability

AI is unbeatable in terms of processing massive content volumes. The more advanced the AI tool, the more content it can moderate and the faster it can do so. While there is no doubting the thoroughness of human moderation, it is highly unlikely that even a massive team can keep up with the amount of content generated every minute. Furthermore, AI tools can handle data across multiple channels in real time, which is a considerable advantage in online gaming.

5. The Ethics

Algorithms are mere extensions of the digital world. They cannot think or decide for themselves. Instead, they learn to make decisions from existing data sets, so it should come as no surprise that AI moderation can inherit the biases in that data. In this context, gaming content moderators may have the advantage of being able to assess context. However, human moderators can also be swayed by their own biases and preconceptions, which may be reflected in their moderation decisions.

So, who wins: AI or human moderation? The answer is both! Successful gaming site moderation relies on deriving the best of both worlds. It is the only pragmatic way to handle problematic content generated in real time.

5 Avenues Where AI Gaming Moderation Requires Human Judgement

AI and human moderation must work in tandem. AI-driven algorithms handle most real-time traffic, filter the content, and forward it to gaming content moderators. From this point on, human moderators use their innate judgment to further ascertain whether the content is gaming-appropriate. This combined approach has several benefits. It is:

- More productive

- Less biased

- More accurate

- Cost-effective

- More practical
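The hand-off described above, where automated filtering deals with most traffic and forwards the rest to human moderators, can be sketched roughly as follows. This is only an illustration under stated assumptions: the term list, the weights, the thresholds, and the names `toy_score`, `triage`, and `moderate` are all hypothetical, and the scoring function is a stand-in for whatever trained model a real system would use.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical placeholder terms and weights; a real system would use a trained model.
FLAGGED_TERMS = {"idiot": 0.6, "loser": 0.5, "trash": 0.4}


@dataclass
class Decision:
    message: str
    score: float
    action: str  # "allow", "review", or "block"


def toy_score(message: str) -> float:
    """Stand-in scorer: sum the weights of flagged terms, capped at 1.0."""
    words = (w.strip(".,!?") for w in message.lower().split())
    return min(1.0, sum(FLAGGED_TERMS.get(w, 0.0) for w in words))


def triage(
    message: str,
    score_fn: Callable[[str], float] = toy_score,
    review_at: float = 0.4,  # assumed threshold: at or above this, a human looks
    block_at: float = 0.9,   # assumed threshold: at or above this, block automatically
) -> Decision:
    """Map a score to an action; uncertain cases are routed to human review."""
    score = score_fn(message)
    if score >= block_at:
        action = "block"
    elif score >= review_at:
        action = "review"
    else:
        action = "allow"
    return Decision(message, score, action)


def moderate(messages: List[str]) -> Tuple[List[str], List[Decision]]:
    """Return (messages delivered to players, decisions queued for human moderators)."""
    delivered: List[str] = []
    review_queue: List[Decision] = []
    for message in messages:
        decision = triage(message)
        if decision.action == "allow":
            delivered.append(message)
        elif decision.action == "review":
            review_queue.append(decision)
        # "block" decisions are dropped in this sketch; a real system would log them.
    return delivered, review_queue


if __name__ == "__main__":
    delivered, queue = moderate(["good game everyone", "you idiot", "idiot loser"])
    print("delivered:", delivered)
    print("for human review:", [d.message for d in queue])
```

The point of the middle band between the two thresholds is that borderline content, such as sarcasm or veiled remarks, is not decided by the automated step at all; it goes to a person, while clear-cut cases at either end are handled without human effort.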

Here are five avenues of gaming moderation that require both AI and the human touch.

1. Live messages

Players constantly chat with each other during matches or team games. Just as teammates encourage one another or devise strategies during a football or baseball match, in-game chats let gamers express their opinions. That also makes live chat a vulnerable avenue, since those opinions may be offensive or controversial.

2. Discussions

Forum discussions are very similar to chats. While voice chat is the most preferred medium in forum discussions, players may also share images, videos, and audio clips. Like live chats, forum discussions need constant monitoring and moderation by AI and human moderators.

3. Music

Here again, players can upload customized or shared music or bizarre sounds that may not go down well with their co-gamers.

4. Gaming characters

One of the biggest attractions of online gaming is the gamer’s freedom to create characters, profiles, and even backstories to make the game more interesting and engaging. Players can misuse this freedom to develop problematic characters entirely out of line with the spirit of the game.

5. Live or recorded videos

Players intending to create trouble can misuse gaming sites to share videos or live streams featuring highly controversial or explicit material such as violence, hate speech, suicide, or sexual content. Such content can have serious consequences for individual players, the gaming community, and society at large.

Such scenarios can occur at any given moment and have a big impact on the gaming community. They can damage your site’s reputation, force players to leave, or even land you in legal trouble.

However, with human moderators and AI, such unsavory and unexpected incidents can be effectively prevented. If AI models fail to identify inappropriate content, human moderators can intervene and take the necessary steps to prevent the issue from escalating.

Stay Safe With Opporture’s AI-powered Game Moderation

It will be a while before AI-powered gaming moderation can operate flawlessly on its own. For now, it needs the human touch to handle the intricate problems and complexities of user-generated content. Opporture’s content moderation services will ensure compliance with your community guidelines while also moderating gaming behavior to make your gaming site safe and enjoyable for your users.

Call us to try a free demo!