Artificial Intelligence is hogging the limelight. This groundbreaking technology has immense potential waiting to be unleashed across diverse industries and verticals. AI's capacity for recognizing patterns and predicting outcomes can be leveraged for content moderation, especially on social media.

Why Do We Need Content Moderation for Social Media?

More than half the world’s population is on social media. Online platforms like Twitter, Facebook, Instagram, TikTok, YouTube, and LinkedIn welcome people irrespective of race, color, creed, or religion. Age, of course, is a criterion, but that hasn’t stopped parents from creating special accounts for their children. Even pets have millions of followers on Instagram and Facebook.

Social media platforms allow users to share videos, posts, and audio clips on almost every topic under the sun. Users are free to express opinions and feelings and even share an hourly account of what they are doing and where they are. Businesses use these platforms to establish an online presence, garner followers, and entice them into becoming customers.

These pulsing centers of digital activity also have a dark side.

Statistics show that nearly 4 out of every 10 people (about 38%) face offensive online behavior from people who hide behind nonsensical usernames and fake IDs.

Cyberbullies and digital miscreants are misusing social media platforms.

This unsavory scenario underlines the pressing need for powerful content moderation strategies. At today's scale, AI and ML (machine learning) are the most practical way to tackle this issue head-on.

How Does AI-powered Content Moderation Work for Social Media?

Content moderation is the process of monitoring and managing the content that pours into social media platforms. Its main aim is to identify and remove inappropriate content and make the platform safe for users of all ages.

Manual moderation is almost unthinkable given the amount of content uploaded every second. AI-driven content moderation, on the other hand, automates the entire process. It meticulously reviews content, identifies objectionable material, and forwards its findings for a final approval decision.

Depending on that decision, the content is made visible to all users or removed from the user's account, and unruly users may even be blocked from using their accounts. Sometimes, users who post unwanted content are let off with a mild warning.
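To make the possible outcomes concrete, here is a minimal sketch of how a moderation system might map a risk score to an action. It assumes an upstream ML classifier has already scored each post between 0 and 1; the `Post` and `Action` types, the thresholds, and the three-strike blocking rule are all hypothetical illustrations, not any platform's actual policy.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PUBLISH = "publish"          # content is visible to all users
    WARN = "warn"                # content stays up; the user gets a mild warning
    REMOVE = "remove"            # content is taken down from the user's account
    BLOCK_USER = "block_user"    # the account itself is blocked


@dataclass
class Post:
    author: str
    text: str
    risk_score: float  # 0.0 to 1.0, assumed to come from an upstream ML classifier


# Hypothetical thresholds; a real platform would tune these to its own policy.
WARN_AT = 0.5
REMOVE_AT = 0.8
STRIKES_BEFORE_BLOCK = 3


def moderate(post: Post, strikes: dict[str, int]) -> Action:
    """Map a post's risk score to an outcome, tracking removals per author.

    `strikes` is mutated: each removal adds one strike to the post's author.
    """
    if post.risk_score >= REMOVE_AT:
        strikes[post.author] = strikes.get(post.author, 0) + 1
        if strikes[post.author] >= STRIKES_BEFORE_BLOCK:
            return Action.BLOCK_USER
        return Action.REMOVE
    if post.risk_score >= WARN_AT:
        return Action.WARN
    return Action.PUBLISH
```

Keeping the strike count outside the function lets the same scoring logic run for every post while the platform decides separately how long strikes should last.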

As far as social media is concerned, content moderation happens in two ways:

- Reviewing and approving content after users upload it.

- Moderating content before it is streamed live.

The types of content moderation generally used to filter spam and make platforms clean and usable for everyone are:

- Post-moderation

- Pre-moderation

- Reactive moderation

- User-only moderation

- Automated or Algorithmic moderation
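The main difference between several of these strategies is *when* review happens. The toy model below sketches pre-moderation, post-moderation, and reactive moderation side by side. The `Platform` class and its method names are hypothetical, and `is_acceptable` stands in for whatever reviewer (human or ML model) makes the call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Platform:
    """Toy platform contrasting three moderation strategies (hypothetical model)."""
    is_acceptable: Callable[[str], bool]  # stand-in for a human or ML reviewer
    visible: list[str] = field(default_factory=list)
    review_queue: list[str] = field(default_factory=list)

    def submit_pre_moderated(self, post: str) -> None:
        # Pre-moderation: nothing goes live until it has been reviewed.
        self.review_queue.append(post)

    def submit_post_moderated(self, post: str) -> None:
        # Post-moderation: publish immediately, then review after the fact.
        self.visible.append(post)
        self.review_queue.append(post)

    def submit_reactive(self, post: str) -> None:
        # Reactive moderation: publish without review; act only on user reports.
        self.visible.append(post)

    def report(self, post: str) -> None:
        # A user report sends live content back to the review queue.
        if post in self.visible and post not in self.review_queue:
            self.review_queue.append(post)

    def process_queue(self) -> None:
        # Approve acceptable posts and take down anything that fails review.
        for post in self.review_queue:
            if self.is_acceptable(post):
                if post not in self.visible:
                    self.visible.append(post)
            elif post in self.visible:
                self.visible.remove(post)
        self.review_queue.clear()
```

Pre-moderation keeps harmful content from ever appearing but delays every post; post-moderation and reactive moderation publish instantly and accept a window in which bad content is visible.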

Of these, automated moderation is the most advanced because it is AI-driven. In automated moderation, ML algorithms detect inappropriate content among the millions of posts uploaded every day. These algorithms are trained to detect unsavory images, videos, audio, and text. What they cannot yet interpret accurately, however, are subtle or nuanced messages of hate, obscenity, bias, or misinformation.

Most often, social media platforms use content moderation tools trained with Natural Language Processing (NLP) on annotated social media posts, web pages, and other text from various communities. This annotated data enables the tools to detect abusive content in the conversations taking place within those communities.

Types of Data Moderated on Social Media Platforms

Social media content moderation covers various types of data, such as:

Text moderation

The volume of text generated on social media platforms exceeds the number of images and videos shared by users. Since this text spans a multitude of languages from all over the world, moderating it requires Natural Language Processing techniques.
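As a simple illustration of training on annotated text, here is a minimal multinomial Naive Bayes classifier written from scratch. This is one classic NLP technique chosen for brevity, not the method any particular platform uses; the class name, the labels, and the tiny training set are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on non-letters; real NLP pipelines are far richer.
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesModerator:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def train(self, examples: list[tuple[str, str]]) -> None:
        # Each example is an annotated (text, label) pair.
        for text, label in examples:
            self.doc_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for word in tokenize(text):
                counts[word] += 1
                self.vocab.add(word)

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            # log P(label) + sum of log P(word | label); smoothing keeps
            # unseen words from driving the probability to zero.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical annotated examples; real training sets contain millions of posts.
training_data = [
    ("you are an idiot and a loser", "abusive"),
    ("nobody likes you, loser", "abusive"),
    ("shut up you idiot", "abusive"),
    ("great photo, thanks for sharing", "ok"),
    ("congratulations on the new job", "ok"),
    ("thanks, see you at the meetup", "ok"),
]

model = NaiveBayesModerator()
model.train(training_data)
```

Because a model like this only counts words, it is exactly the kind of system that misses sarcasm and other subtle or nuanced messages.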

Image moderation

Image moderation calls for more sophisticated AI recognition. ML algorithms use Computer Vision to identify objects or characteristics in images, such as nudity, weapons, and logos.
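A Computer Vision model typically reports a confidence score for each category it detects, and a policy layer then turns those scores into a decision. The sketch below assumes such per-label scores are already available; the `POLICY` table, its thresholds, and the decision names are hypothetical.

```python
# Hypothetical per-category policy: how confident the vision model must be
# before an image is removed outright or sent to a human moderator.
POLICY = {
    "nudity": {"remove": 0.90, "review": 0.60},
    "weapons": {"remove": 0.95, "review": 0.70},
    "logos": {"remove": None, "review": 0.80},  # never auto-removed, only reviewed
}


def decide(scores: dict[str, float]) -> tuple[str, list[str]]:
    """Turn a vision model's per-label confidences into one moderation decision.

    `scores` is assumed to map label -> confidence in [0, 1]; labels missing
    from POLICY are ignored. Returns the decision and the labels behind it.
    """
    to_remove, to_review = [], []
    for label, score in scores.items():
        rule = POLICY.get(label)
        if rule is None:
            continue
        if rule["remove"] is not None and score >= rule["remove"]:
            to_remove.append(label)
        elif score >= rule["review"]:
            to_review.append(label)
    if to_remove:
        return "remove", to_remove
    if to_review:
        return "human_review", to_review
    return "approve", []
```

Routing borderline scores to human review rather than removing them automatically is one way to compensate for the nuance that models still miss.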

Video moderation

Video moderation must also catch images or videos manipulated with malicious intent, including deepfakes created with Generative Adversarial Networks, or GANs. ML models trained on such manipulated media can detect videos containing fabricated characters or actions, as well as deepfakes.

More than a decade has passed since social media came into existence, but the need for content moderation is greater now than ever. If moderation is not enforced firmly, it may be too late to contain the repercussions once things get out of hand.

5 Reasons Why Content Moderation is a Must For Social Media Platforms

1. To maintain a safe online environment for users.

Every social media platform is responsible for protecting its users from any content that instigates hate, crime, untoward behavior, cyberbullying, or misinformation. Content moderation significantly reduces such risks by identifying damaging content and removing it from the platform.

2. To maintain a harmonious user relationship.

Content moderation bridges the gap between moderators and users. Users can share company, brand, or product-related feedback directly with the moderators. These insights help businesses improve their services and maintain a harmonious relationship with their customers.

3. To ensure safe and user-friendly communities.

Social media platforms are also virtual communities, and like any other community, they require decorum to stay safe and welcoming for everyone. Moderation helps keep an eye on non-compliant users and promotes positivity and inclusivity.

4. To prevent the spread of false information.

Anything can become “trending” or “viral” on social media platforms, and misleading information is at the top of the list. Such information in videos, texts, or images can spread like wildfire as users share it on their profiles. Content moderation curbs the spread of false information inside the community.

5. To regulate the live streaming of videos.

Some people do not think twice about misusing live-streaming technology to gain attention or put others in embarrassing situations. Many have even tried to stream dangerous or sensitive videos on their social media handles. AI-powered content moderation can help curb such misuse and ensure that live streaming is used for the right reasons.

Social media content moderation is the most pragmatic solution for regulating content on these digital platforms. Without it, unfiltered content can severely damage the reputations of individuals, businesses, and the platforms themselves.

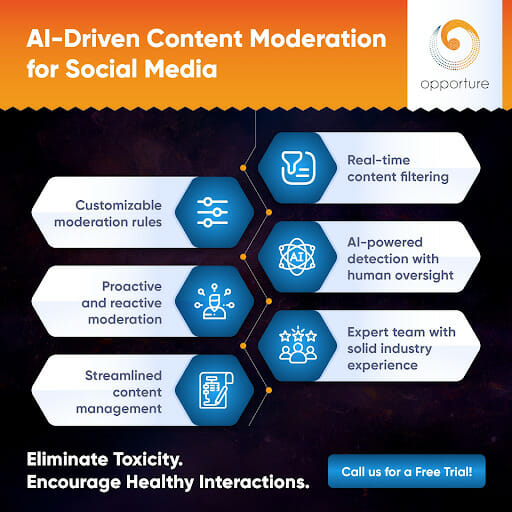

Social Media Content Moderation by Opporture: The Most Reputed Content Moderation Company in the USA

Social media is a lifeline for many businesses across North America. At Opporture, we use the most advanced ML algorithms, AI models, and an expert team to offer well-rounded content moderation services. As a leading content moderation company, we work with many businesses nationwide across various industry sectors.

Do you want to sign up for our services? Call us for a free trial.