There are probably more than a dozen movies where AI-powered robots read the human mind, obey their human master, and end up ruling the world. But that’s just fiction. With AI gaining traction at lightning speed, can this advanced technology read our minds in the real world?

The answer is “yes”: AI can read your mind, but only if you are hooked up to an fMRI machine and only if the AI has been trained to process visual information. How is this possible? Read on, and you will be astonished at how far science has advanced.

Are Bots Reading Our Minds?

Conspiracy theorists and AI “alarmists” have repeatedly warned us about one thing: AI machines and bots will take over the human race the day they learn to read our minds.

Now, thanks to researchers Yu Takagi and Shinji Nishimoto from Osaka University, Japan, the bots have successfully reconstructed high-resolution images by reading human brain activity.

So, what happened?

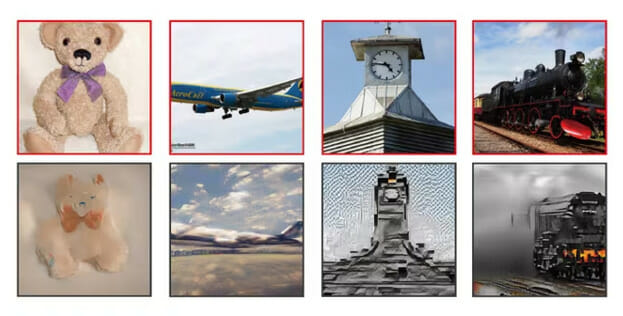

The researchers used Stable Diffusion, a popular deep learning model, to translate fMRI scan data into AI reconstructions of the images people were looking at. The algorithm generated roughly a thousand images using cues from the fMRI brain scans. The reconstructions, which included a teddy bear and an airplane, were about 80% accurate to the images the test subjects had seen.

Amazing, isn’t it? What made this possible? As you may have noticed, we’ve repeatedly used the term “fMRI scan.” This entire research project and its results were made possible by this highly advanced machine. The interesting question is: How?

Deploying the fMRI: Reconstructing Visual Images From Brain Activity

fMRI, or Functional Magnetic Resonance Imaging, is one of the most advanced tools for understanding human thinking. Rather than photographing thoughts directly, the scanner tracks changes in blood flow while a person performs mental tasks. The result is a set of colorful brain maps that show which regions are active as the person views or thinks about various images.

However, this is not the first time researchers have tried this experiment. Earlier attempts relied on generative models that had to be trained from scratch on fMRI data, a far more herculean task than it sounds, because brain datasets are small. There had to be an easier way. The solution? Diffusion models!

What Are Diffusion Models (DMs)?

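DMs are deep generative models that achieve state-of-the-art performance in image-related tasks. They learn to create images by reversing a gradual noising process: during training, noise is added to images step by step, and the model learns to remove it. As always, the technology has evolved, and latent diffusion models (LDMs), such as Stable Diffusion, are the most recent breakthrough. LDMs reduce computational cost by running the diffusion process in a compressed latent space produced by their autoencoder component.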
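To make the “reverse a noising process” idea concrete, here is a minimal NumPy sketch of the forward (noising) half of a diffusion model, in the style of the DDPM formulation. It assumes a linear noise schedule from 1e-4 to 0.02 over 1,000 steps, a common textbook choice rather than Stable Diffusion's exact settings, and uses a random 64x64 array as a stand-in for an image or latent. The neural network that learns to undo these steps is not shown.

```python
import numpy as np

def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Per-step noise variances (an assumed linear schedule, for illustration only)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0: np.ndarray, t: int, alpha_bar: np.ndarray, rng: np.random.Generator):
    """Sample x_t from q(x_t | x_0) = N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

betas = linear_beta_schedule(1000)
alphas = 1.0 - betas
alpha_bar = np.cumprod(alphas)  # how much of the original signal survives after t steps

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(64, 64))  # hypothetical stand-in for an image or LDM latent

for t in (0, 250, 500, 999):
    x_t, _ = forward_diffuse(x0, t, alpha_bar, rng)
    corr = np.corrcoef(x0.ravel(), x_t.ravel())[0, 1]
    print(f"step {t:4d}: signal weight={np.sqrt(alpha_bar[t]):.3f}, corr with x0={corr:.3f}")
```

As the step index grows, the signal weight shrinks toward zero and the sample becomes almost pure noise, which is the state a diffusion model starts from when it generates a new image.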

Looking back at the history of technology, neuroscience has long inspired computer vision, helping artificial systems see the world more like humans do. Advances in neuroscience and AI now make it possible to directly compare the brain's internal (latent) representations with those of neural networks.
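One widely used technique for such comparisons is representational similarity analysis (RSA): build a matrix of how differently a brain region responds to each pair of images, build the same matrix from a neural network layer, and correlate the two. The sketch below is a generic illustration on synthetic data, not necessarily the method used in the Osaka study; `rdm`, `rank`, and `rsa_score` are hypothetical helper names.

```python
import numpy as np

def rdm(responses: np.ndarray) -> np.ndarray:
    """Representational dissimilarity matrix: 1 - Pearson correlation between
    the response patterns (rows) evoked by each pair of stimuli."""
    return 1.0 - np.corrcoef(responses)

def rank(x: np.ndarray) -> np.ndarray:
    """Ranks of x (assumes no ties, which holds for continuous random data)."""
    ranks = np.empty(len(x))
    ranks[np.argsort(x)] = np.arange(len(x))
    return ranks

def rsa_score(brain: np.ndarray, model: np.ndarray) -> float:
    """Spearman correlation between the upper triangles of two RDMs."""
    iu = np.triu_indices(brain.shape[0], k=1)
    a, b = rdm(brain)[iu], rdm(model)[iu]
    return float(np.corrcoef(rank(a), rank(b))[0, 1])

rng = np.random.default_rng(7)
n_images = 40
shared = rng.standard_normal((n_images, 20))  # hypothetical structure shared by the images
brain = shared @ rng.standard_normal((20, 500)) + rng.standard_normal((n_images, 500))  # 500 "voxels"
model_layer = shared @ rng.standard_normal((20, 256))  # a layer driven by the same structure
unrelated = rng.standard_normal((n_images, 256))       # features with no shared structure

print(f"brain vs. related model layer: {rsa_score(brain, model_layer):.2f}")
print(f"brain vs. unrelated features:  {rsa_score(brain, unrelated):.2f}")
```

Because RSA compares the geometry of responses rather than raw values, it can relate 500 voxels to 256 network units without any mapping between them.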

Hence, it should come as no surprise that the Osaka University researchers were able to create a gallery of images by combining fMRI data, diffusion models, and computer vision.

Earlier efforts, such as reconstructing visual images from brain activity and comparing the computational processes of biological and artificial systems, also laid the groundwork for this research.

But such tasks are easier said than done. Reconstructing images from brain activity is challenging because the nature of the brain's representations is still poorly understood. In addition, brain datasets are usually too small for researchers to draw firm conclusions from them. Let’s now circle back to the Osaka University research.

What Does the Bot Do When It Reads the Human Mind?

According to the researchers, the AI model draws information from the brain areas involved in picture processing, primarily the occipital and temporal lobes. fMRI detects changes in blood flow to these active regions.

When neurons in a region work harder, they consume more oxygen, and blood flow to that region increases to compensate. Because oxygen-rich and oxygen-poor blood respond differently to the scanner's magnetic field, fMRI can detect these changes (known as the blood-oxygen-level-dependent, or BOLD, signal) during cognitive or emotional activity and pinpoint where neurons are working hardest.
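Because blood flow lags behind neural activity, the fMRI signal is slow. A standard way to model this (not specific to the Osaka study) is to convolve a timeline of neural activity with a hemodynamic response function (HRF). The sketch below assumes SPM-style double-gamma HRF parameters and a hypothetical 3-second image presentation starting at 10 seconds.

```python
import math
import numpy as np

def canonical_hrf(t: np.ndarray, peak_shape: float = 6.0, undershoot_shape: float = 16.0,
                  undershoot_ratio: float = 1.0 / 6.0) -> np.ndarray:
    """Double-gamma hemodynamic response function, normalized to a peak of 1."""
    def gamma_pdf(x: np.ndarray, shape: float) -> np.ndarray:
        # Gamma density with unit scale; zero for x <= 0
        out = np.zeros_like(x)
        pos = x > 0
        out[pos] = x[pos] ** (shape - 1) * np.exp(-x[pos]) / math.gamma(shape)
        return out
    h = gamma_pdf(t, peak_shape) - undershoot_ratio * gamma_pdf(t, undershoot_shape)
    return h / h.max()

dt = 0.1                              # time resolution in seconds
hrf_t = np.arange(0.0, 32.0, dt)
hrf = canonical_hrf(hrf_t)

time = np.arange(0.0, 60.0, dt)
stimulus = ((time >= 10.0) & (time < 13.0)).astype(float)  # hypothetical image shown from 10 s to 13 s

# Predicted BOLD response = neural activity (a boxcar) convolved with the HRF
bold = np.convolve(stimulus, hrf)[: len(time)] * dt
peak_time = time[np.argmax(bold)]

print(f"HRF peaks at {hrf_t[np.argmax(hrf)]:.1f} s; predicted BOLD peaks at {peak_time:.1f} s")
```

The predicted response peaks several seconds after the image appears, which is why fMRI pinpoints where activity happens much more precisely than exactly when it happens.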

The researchers used data from four people. For training, each participant viewed about 10,000 images while inside the fMRI scanner, and each image was shown three times. The resulting fMRI data were transferred to a computer to train the AI on how each participant’s brain processed and analyzed these images.

To reconstruct an image, the diffusion model starts from pure noise (imagine something like television static) and refines it step by step into a picture, guided by features decoded from the participant’s brain activity.
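The researchers' published paper describes the brain-to-AI step as surprisingly simple: L2-regularized (ridge) linear regression that maps fMRI voxel patterns onto the inputs Stable Diffusion uses (an image latent and a text-conditioning vector). The sketch below illustrates only that decoding idea on synthetic data; the trial counts, voxel count, latent size, and regularization strength are made-up values, and this is not the authors' code.

```python
import numpy as np

def fit_ridge(X: np.ndarray, Y: np.ndarray, alpha: float) -> np.ndarray:
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

rng = np.random.default_rng(42)
n_train, n_test = 800, 200      # hypothetical numbers of image trials
n_voxels, latent_dim = 300, 16  # hypothetical voxel count and latent size

# Synthetic ground truth: a latent vector per viewed image, and voxels that
# respond linearly to those latents plus measurement noise.
latents = rng.standard_normal((n_train + n_test, latent_dim))
encoding = rng.standard_normal((latent_dim, n_voxels))
voxels = latents @ encoding + 2.0 * rng.standard_normal((n_train + n_test, n_voxels))

X_train, X_test = voxels[:n_train], voxels[n_train:]
Y_train, Y_test = latents[:n_train], latents[n_train:]

W = fit_ridge(X_train, Y_train, alpha=100.0)
Y_pred = X_test @ W  # decoded latents for trials the model never saw

# Per-dimension correlation between decoded and true latents on held-out trials
corrs = [np.corrcoef(Y_pred[:, d], Y_test[:, d])[0, 1] for d in range(latent_dim)]
print(f"mean held-out correlation: {np.mean(corrs):.2f}")
```

In a full pipeline, latents decoded this way would then be handed to the diffusion model, which turns them into a picture.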

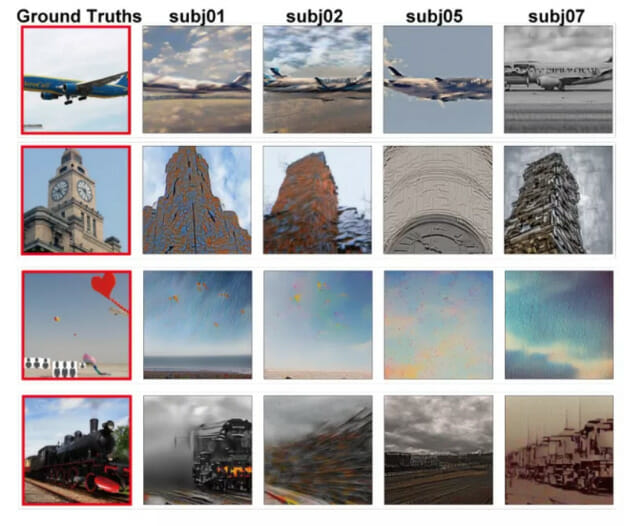

A surprising finding was that the AI model read some participants’ brain activity better than others’. Even more interesting, despite these differences, the reconstructions closely resembled what the participants had been shown: the objects, color schemes, and image compositions were often quite similar. Take a look at the images above.
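How similar is “similar”? A common way to score reconstructions in this literature is a two-way identification test: for each reconstruction, check whether it correlates more with its own target image than with a different image, so chance performance is 50%. The sketch below assumes the images have already been turned into feature vectors (random stand-ins here) and is a generic illustration, not the study's exact evaluation.

```python
import numpy as np

def two_way_identification(recon_feats: np.ndarray, true_feats: np.ndarray) -> float:
    """Fraction of (target, distractor) comparisons in which a reconstruction
    correlates more strongly with its own target than with the distractor."""
    n = len(true_feats)
    # corr[i, j]: Pearson correlation between reconstruction i and true image j
    corr = np.corrcoef(recon_feats, true_feats)[:n, n:]
    wins, trials = 0, 0
    for i in range(n):
        for j in range(n):
            if i != j:
                wins += int(corr[i, i] > corr[i, j])
                trials += 1
    return wins / trials

rng = np.random.default_rng(1)
true_feats = rng.standard_normal((50, 128))  # hypothetical features of 50 shown images
good_recon = true_feats + 0.5 * rng.standard_normal(true_feats.shape)  # noisy but faithful
random_recon = rng.standard_normal(true_feats.shape)                   # unrelated guesses

print(f"faithful reconstructions: {two_way_identification(good_recon, true_feats):.2f}")
print(f"random reconstructions:   {two_way_identification(random_recon, true_feats):.2f}")
```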

Real-Life Applications of the Diffusion Model

A technological breakthrough of this magnitude has left even its researchers spellbound. In an interview with Newsweek, Takagi, an assistant professor at Osaka University, said that the diffusion model used in the research was not originally created to understand the human brain. However, the image-generating AI model predicted brain activity remarkably well, which indicates that it can be used to reconstruct visual experiences from the brain.

According to Takagi, this technique may one day be used to construct images directly from a person’s imagination. His reasoning is that visual information captured by the retina is processed in the occipital lobe, in an area called the “visual cortex.” Because this region is also activated when we merely imagine images, it may be possible to apply the same technique to brain activity recorded while we imagine things.

However, as exciting as this sounds, we still have a long way to go before these plans come to fruition. Much more research is needed to learn how to decode such activity accurately. Nevertheless, Takagi said that this advancement could potentially be used to develop brain-machine interfaces for creative and clinical applications.

Similar Research That Demonstrates AI’s Capabilities

Takagi and Nishimoto’s study is part of a growing body of similar research, especially in the field of medicine.

A study published in November 2022, for example, decoded the brain signals of a person with paralysis and converted them into coherent sentences in real time.

The mind-reading AI model analyzed the participant’s brain activity as they spelled out words letter by letter to construct full phrases. Researchers from the University of California, San Francisco believe that paralyzed people who have lost the ability to write or speak may one day be able to communicate through such a speech neuroprosthesis.

The device tracked the participant’s brain activity while they silently attempted to say a code word for each letter (such as “alpha” for A), forming phrases from a 1,152-word lexicon with an average character error rate of 6.13%.
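Character error rate (CER) is conventionally the edit (Levenshtein) distance between the decoded text and the intended text, divided by the intended text's length, so 6.13% works out to roughly six wrong characters per 100. A minimal sketch of that standard definition follows; the example sentence is made up, and the researchers' exact scoring details are not reproduced here.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions
    needed to turn `ref` into `hyp` (dynamic programming, one row at a time)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # delete r
                curr[j - 1] + 1,         # insert h
                prev[j - 1] + (r != h),  # substitute (free when characters match)
            ))
        prev = curr
    return prev[-1]

def character_error_rate(ref: str, hyp: str) -> float:
    """Edit distance normalized by the length of the intended (reference) text."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return levenshtein(ref, hyp) / len(ref)

# Hypothetical example: one wrong letter in a 12-character sentence
print(character_error_rate("i am thirsty", "i am thirsti"))
```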

Winding Up

At this point, we are only on the cusp of such major technological breakthroughs, and generating images with AI models that analyze brain activity still requires considerable time and money. There’s much more work to be done. However, the study clearly demonstrates both the similarities and the differences in the ways the human brain and an AI-powered machine interpret the world.

As Takagi said, their work highlights the combined power of artificial intelligence and neuroscience and provides insights into how the two may work hand in hand in the future.

Opporture offers comprehensive AI model training services to bridge the gap between AI and neuroscience. Find out how this leading AI firm helps businesses across industries integrate AI. Contact us today!